- This is the 2nd of 4 projects in "Data Career Accelerator: Real-World Training Program on Data and Cloud Technologies"

🎓 Certification Included 🎓

Prerequisites:

⏰ Estimated Completion Time:

Data & Cloud Engineering Project

Data Engineering Project

Automated Web Scraper for Python Job Listings on Google Cloud Platform

Introduction to GCP: End-to-End Weather API Data Pipeline

What you will learn:

🎓 Certification Included 🎓

Upon completion of project material!

💭 Who is this project for?

This project suits a wide range of tech professionals, from beginners to experienced engineers who want to explore data and cloud technologies. Whether you want to add an impressive project to your portfolio or are starting your first project in the cloud, it is designed for you:

#1 Aspiring Data Engineers & Python Developers

If you're starting your tech career or enhancing your programming skills, this project offers a standout opportunity. Most beginners don't know how to deploy web scrapers in a production environment. By building and managing a production-grade web scraper on Google Cloud, you'll distinguish yourself from other candidates. This practical, scalable project experience can help you land your first full-time job.

#2 Machine Learning & Software Engineers

For Machine Learning and Software Engineers, this project is an excellent chance to expand your expertise into data collection and cloud infrastructure management. It's perfect for professionals eager to learn about data pipelines for machine learning models or those interested in automating web data interactions. This knowledge is crucial for integrating robust data handling into your development processes.

#3 Experienced Cloud & BI Engineers

If you're proficient in cloud technologies or business intelligence, this project will broaden your capabilities by introducing advanced data processing and automation in Google Cloud. Learning to implement automated web scraping and task scheduling with Cloud Run and Cloud Scheduler will enhance your role and pave the way for new career prospects.

#4 Career Switchers to Tech

If you're transitioning into tech from fields like finance, marketing, or science, this project is your gateway. It offers hands-on experience with coding, data handling, and cloud deployment, equipping you with the skills needed for tech roles. This project ensures you gain the confidence to tackle technical tasks and discussions, positioning you well for roles in data and cloud engineering.

What's included?

💎 Real-World Portfolio Project:

"Automated Web Scraper for Python Job Listings on Google Cloud Platform"

🎁 Bonuses Included with Every Enrollment:

What Our Students Say ❤️

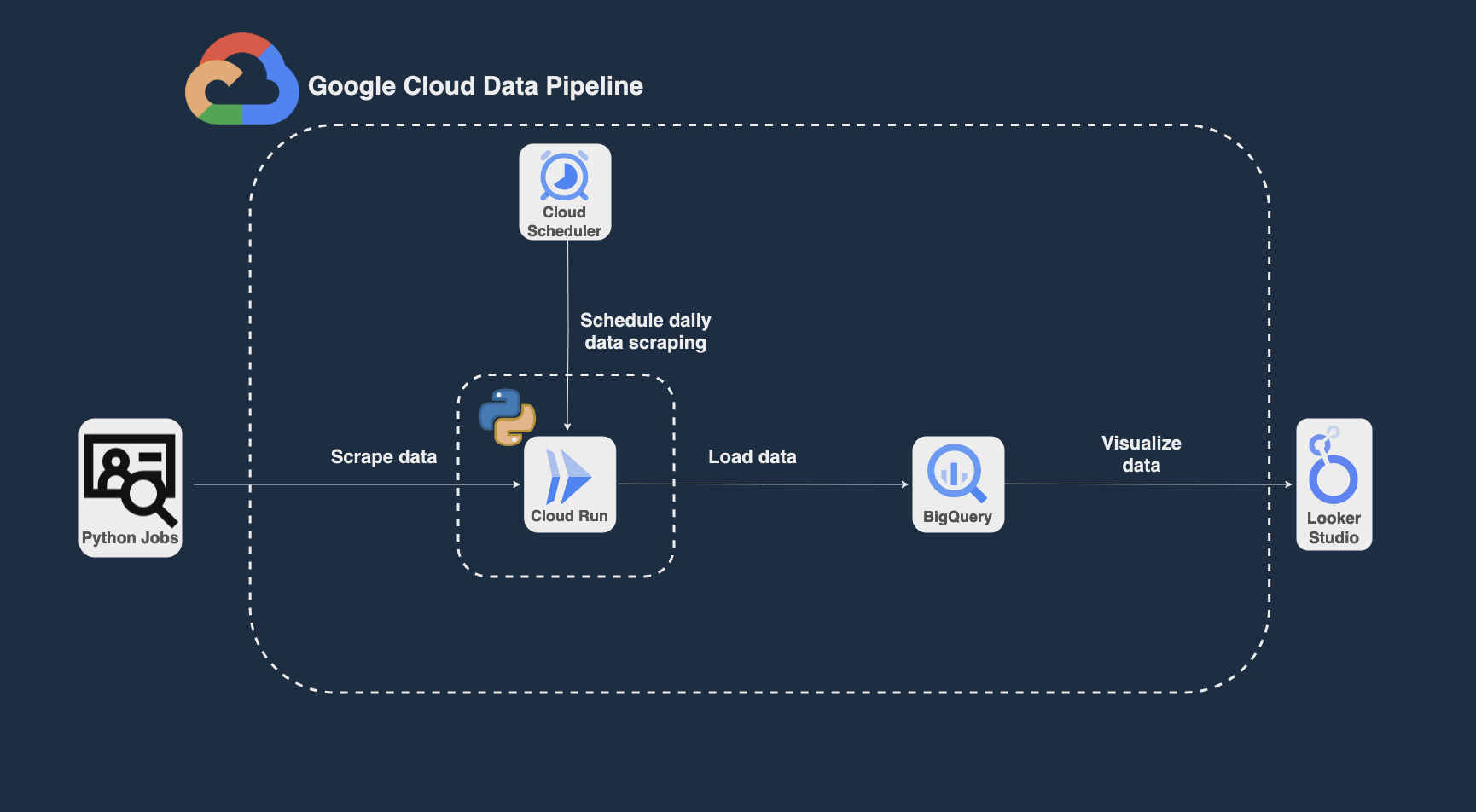

Project architecture diagram:

Project Workflow

Take your career to the next level with better job opportunities and skills!

Project Material

📝 Educational Text Material

We provide comprehensive step-by-step tutorials designed to guide you through building and deploying your project on the cloud. You'll receive detailed instructions for uploading your project to GitHub and gain access to common interview questions and answers related to the project. These resources are tailored to enhance your understanding and boost your job preparation effectively.

▶️ Comprehensive Video Lectures

For a more immersive learning experience, we offer video tutorials whenever needed. For instance, you can learn to build an interactive dashboard by closely following our step-by-step video lessons, making complex concepts easier to grasp and apply.

💯 Assessments & Quizzes

Validate your grasp of the technologies and their strategic application with carefully designed assessments and quizzes integrated within the course structure.

🎓 Certificate of Completion

Upon successful completion of the course, you will be awarded a professional Certificate of Completion. Showcase your accomplishment on platforms like LinkedIn and other social networks.

Mike Chionidis

👋 I'm Mike, a Freelance Data & AI Engineer based in Greece.